This is a joint event by OCS and NeiroHUB dedicated to artificial intelligence and the challenges of using it, illustrated with real business tasks. With the advent of AI, completely new opportunities have opened up for people. Many companies are already beginning to realize the potential of AI and are learning to use it to optimize employee performance in various fields.

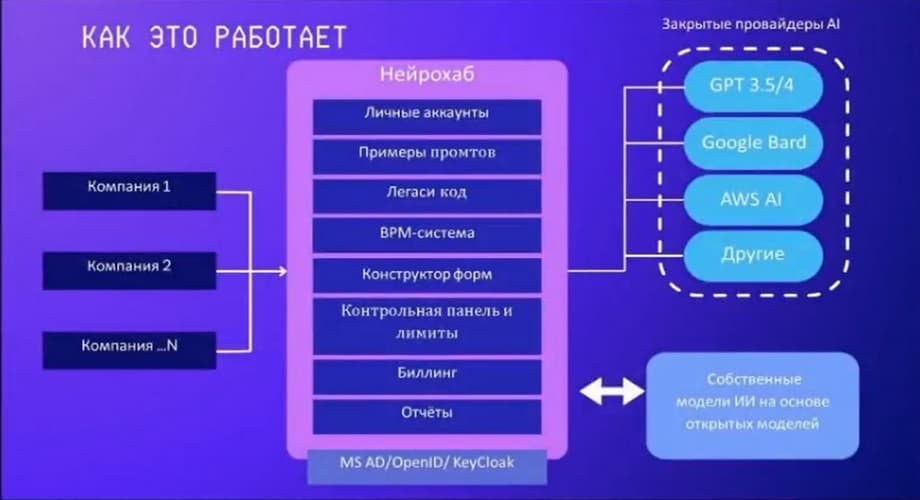

To help businesses integrate this technology into their existing corporate structure and avoid the associated risks, a platform has been created that makes AI easy to use for corporate purposes and ensures data confidentiality, transparent billing, and control over current processes.

Today's program includes:

- The challenges of using AI in real business tasks.

- Is the access platform something new?

- The effectiveness of the platform for business in numbers.

Today's event is led by Svyatoslav Bagriy, Head of Partner Network Development at AGORA. This meeting is dedicated to the use of artificial intelligence in business. Today, we will examine how modern companies can organize their business processes and make effective use of artificial intelligence resources to solve real business tasks.

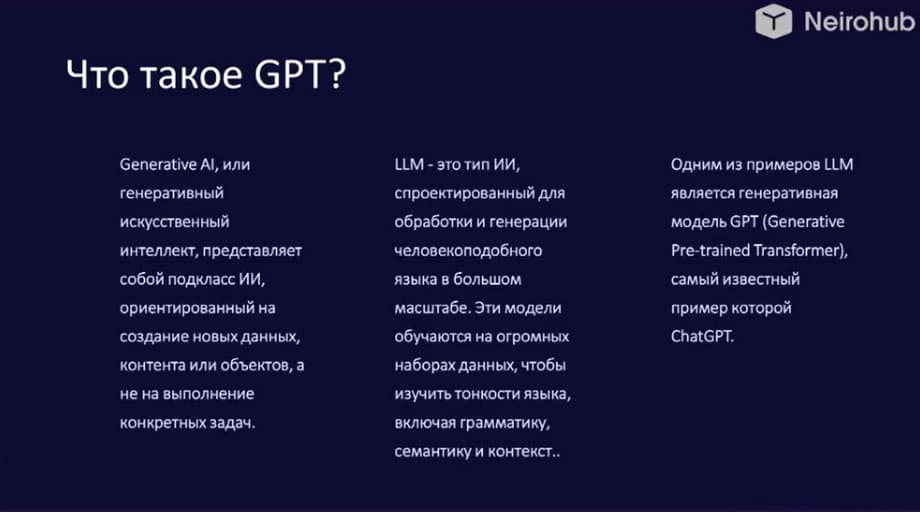

Let's talk a little about history. What is GPT? GPT stands for Generative Pre-trained Transformer. It was developed by a team of researchers at OpenAI. It all started in 2018 when they introduced GPT-1. This was followed by improved versions of the model, such as GPT-2 and GPT-3. GPT itself has attracted attention for its ability to generate human-like text using machine learning and neural network mechanisms.

LLM stands for Large Language Model. It is a language model used to estimate the probability of a sequence of words in natural language, and it is trained to predict the next words in a text based on the previous ones. LLMs are commonly used in various natural language processing tasks, including text generation and understanding natural language communication. One of the most prominent examples of an LLM is the GPT family of generative models, best known through ChatGPT.
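To make the idea of "predicting the next word from the previous ones" concrete, here is a deliberately tiny sketch in Python. It is not how GPT works: real LLMs are transformer networks conditioned on long contexts, while this is a bigram counting model that looks only at the single previous word. The corpus and function names are illustrative assumptions.

```python
from collections import Counter, defaultdict
from typing import Optional

def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word follows each previous word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word_probs(counts: dict[str, Counter], prev: str) -> dict[str, float]:
    """Estimate P(next | prev) from the counts; empty dict for unseen words."""
    following = counts.get(prev.lower(), Counter())
    total = sum(following.values())
    return {w: c / total for w, c in following.items()} if total else {}

def predict_next(counts: dict[str, Counter], prev: str) -> Optional[str]:
    """Return the most probable next word, or None if prev was never seen."""
    probs = next_word_probs(counts, prev)
    return max(probs, key=probs.get) if probs else None

corpus = [
    "the model predicts the next word",
    "the model generates text",
    "the next word depends on previous words",
]
model = train_bigram(corpus)
print(next_word_probs(model, "the"))  # {'model': 0.5, 'next': 0.5}
print(predict_next(model, "next"))    # word
```

Very roughly, moving from word-pair counts to a neural network trained on huge corpora with the same next-word objective is the step from this toy to an LLM.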

The best-known LLMs today are shown on the slide above. They fall into two groups: open models and closed models. How did ChatGPT take off when GPT itself was not yet widely known? Its creators simply combined the capabilities of GPT with the ability to send it queries through a chat. In other words, combining two familiar formats, a generative model and chat-based communication, gave birth to ChatGPT, and this led to a rapid growth in the popularity of artificial intelligence.

What does artificial intelligence bring to business today? According to estimates, it can increase the efficiency of business processes by up to 43%. This figure is large enough that even companies that are only considering AI for their business processes should take it seriously. Above all, AI increases employee efficiency and productivity and makes better use of employees' time: routine tasks that used to consume that time can now be handed over to AI, which handles them on the employee's behalf, and it is a very cheap resource. AI is also changing the very way we interact with the Internet and with IT solutions.

The problems that businesses currently face when using artificial intelligence are quite broad. Today, we highlight six of them. The first is uncontrolled access to corporate AI accounts. Who has access to these accounts: the employees themselves, or an authorized employee who grants access rights to others? Under what scenarios are these accounts and AI resources used? What is permitted through corporate frontends? These are the questions that arise when we try to control the use of AI. The second is shadow use: the risk that employees will use these resources for their own purposes, for example for third-party projects rather than company tasks. The company provides the resource but can control neither how it is used nor what it costs. The third is outdated, inefficient processes. Virtually any business that carefully reviews its processes will find that, over the course of its history, some inefficient and outdated ones have accumulated. These processes can be optimized, and handing these tasks over to AI can raise efficiency by 10 to 40%. The fourth is expenses. How do you control AI costs, especially if every employee who uses it has their own account? Each of these accounts has to be paid for somehow, and the whole picture is quite opaque.

Opaque billing and fairly unpredictable expenses are therefore a serious problem for a business that is considering AI but has not yet taken the step. The fifth problem is the lack of a single hub. Different APIs serve different purposes, and to use different networks, different AI services, and different LLMs, you have to connect to each of them. With a single entry point, a single hub, this is much faster and easier, and you do not need to maintain each connection separately. Finally, concluding a contract with AI providers is currently a problem in itself. The models listed earlier are not from Russian providers; Russian providers are appearing, but there are not many of them yet. As a result, the main capabilities of these models are out of reach for anyone who needs to conclude an agreement with an AI provider quickly.
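The "single hub" idea can be sketched as one entry point that routes each request to a per-provider adapter, so client code never talks to individual vendor APIs. This is a minimal Python sketch under stated assumptions: the model names and adapters are hypothetical stubs rather than real provider SDKs, and it does not describe how Neirohub itself is implemented.

```python
from typing import Callable

# Hypothetical provider adapters. In a real hub each would wrap one vendor's
# SDK or HTTP API; here they are offline stubs so the sketch runs anywhere.
def provider_a(prompt: str) -> str:
    return f"[provider-a] {prompt}"

def provider_b(prompt: str) -> str:
    return f"[provider-b] {prompt}"

class Hub:
    """Single entry point: callers name a model, the hub picks the adapter."""

    def __init__(self) -> None:
        self._routes: dict[str, Callable[[str], str]] = {}

    def register(self, model: str, adapter: Callable[[str], str]) -> None:
        # Connecting a new provider means adding one route, not changing clients.
        self._routes[model] = adapter

    def complete(self, model: str, prompt: str) -> str:
        if model not in self._routes:
            raise KeyError(f"model not connected to hub: {model}")
        return self._routes[model](prompt)

hub = Hub()
hub.register("model-a", provider_a)  # hypothetical model names
hub.register("model-b", provider_b)
print(hub.complete("model-b", "Summarize the meeting notes"))
```

The design choice is that every client depends on one interface (`complete(model, prompt)`), so adding or replacing a provider only touches the hub's routing table.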

Today we want to present a new platform for accessing and managing corporate artificial intelligence: Neirohub.

To help businesses integrate artificial intelligence into their existing corporate structure and avoid the associated risks, we have developed Neirohub, a platform that makes AI easy to use for corporate purposes and ensures the confidentiality of important data, transparent billing, and control over current processes. Take artificial intelligence under control. Today we will tell you how it works and how you can use it.

Neirohub is a single online access platform. It combines a set of services with a user-friendly personal-account interface that includes example prompts, a form builder, reports, and billing in a single window. A company connects to the platform as a service; many companies can be connected, and the platform itself is already connected to most of the language models they need. The first key feature of Neirohub is flexibility.

You can choose AI from different providers and customize solutions using the API. Next is control: set roles and access rights in accordance with company policies. Then security: use middleware to protect corporate data.
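As an illustration of control and security working together, here is a minimal sketch of a request middleware that checks a role-to-model policy and masks e-mail addresses and card-like numbers before a prompt leaves the company. The roles, model names, and regular expressions are hypothetical; real data-loss prevention needs far more than two patterns.

```python
import re

# Hypothetical access policy: which roles may use which models.
ROLE_MODELS = {
    "analyst": {"model-a", "model-b"},
    "intern": {"model-a"},
}

# Illustrative patterns for data that should not leave the company.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
CARD = re.compile(r"\b\d{4}(?:[ -]?\d{4}){3}\b")

def middleware(role: str, model: str, prompt: str) -> str:
    """Reject requests the role is not allowed to make, then mask sensitive data."""
    if model not in ROLE_MODELS.get(role, set()):
        raise PermissionError(f"role {role!r} may not use {model!r}")
    prompt = EMAIL.sub("[EMAIL]", prompt)
    return CARD.sub("[CARD]", prompt)

print(middleware("analyst", "model-b", "Contact ivan@example.com, card 1234 5678 9012 3456"))
# Contact [EMAIL], card [CARD]
```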

Billing in a single window: include services from various providers and settle payments in one system. Multifunctionality: use all modern capabilities and keep up with the times. Teamwork: the platform lets you organize users into teams with access restrictions.
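Single-window billing essentially means converting usage from every provider into one report. A minimal sketch, assuming usage is logged per team as token counts; the model names and prices below are placeholders, not real tariffs of any provider.

```python
from collections import defaultdict
from dataclasses import dataclass

# Placeholder prices per 1,000 tokens; NOT real tariffs of any provider.
PRICE_PER_1K = {"model-a": 0.5, "model-b": 2.0}

@dataclass
class UsageRecord:
    team: str
    model: str
    tokens: int

def bill_by_team(records: list[UsageRecord]) -> dict[str, float]:
    """Convert usage from all providers into one cost total per team."""
    totals = defaultdict(float)
    for r in records:
        totals[r.team] += r.tokens / 1000 * PRICE_PER_1K[r.model]
    return dict(totals)

records = [
    UsageRecord("qa", "model-a", 12_000),
    UsageRecord("qa", "model-b", 1_500),
    UsageRecord("marketing", "model-b", 4_000),
]
print(bill_by_team(records))  # {'qa': 9.0, 'marketing': 8.0}
```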

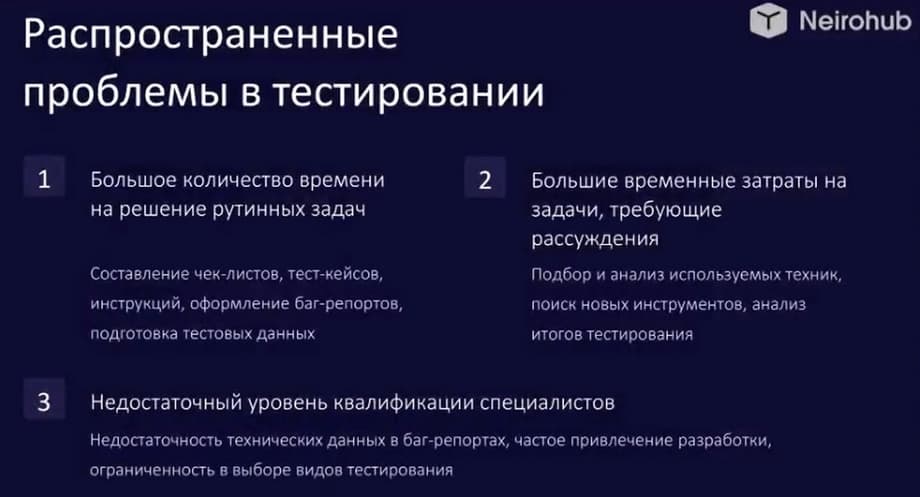

Now let's move on to practical cases. We will look at examples from different departments, starting with the use of artificial intelligence in the testing department. In this block, we will look at the main problems testing faces and how a neural network can help solve them. Let's start with the problems. There are quite a few of them, and they are fairly extensive. Perhaps the most significant is the large amount of time spent on routine tasks.

We have long been able to speed up manual testing itself through automation. But the preparatory and finalizing work that exists in both manual and automated testing remains: compiling test cases and checklists, producing numerous diagrams, schemes, and other documentation, preparing test data, and writing bug reports. Until now, the only way to cut the time spent on it has been to cut corners. Either this work is not done at all and we are left without documentation, or it is done partially, in which case it might as well not be done, or it consumes 50-70% of the total testing time. Yes, we have tools: we can prepare templates, for example, or build diagrams with construction tools instead of from scratch. Nevertheless, the results still need extensive rework and take significant time. The next problem area, closely linked to the first and also time-consuming, is tasks that require reasoning and analysis.

These include analyzing the techniques in use, searching for new tools, and analyzing test results. We all understand that an analysis has to be prepared, then carried out, then followed by final documents and, if necessary, approvals, and this process is quite resource-intensive. In addition, if the analysis leads us to choose a new tool, we face the question of how to implement it. There are many options, but most often it comes down to hiring a specialist or training current employees. When hiring a dedicated specialist, there is a risk that the tool will turn out not to suit us, leaving us with even more problems to solve.

If we decide to train current employees, we stretch out the implementation of new technologies. How can a neural network help us solve these and many other problems?

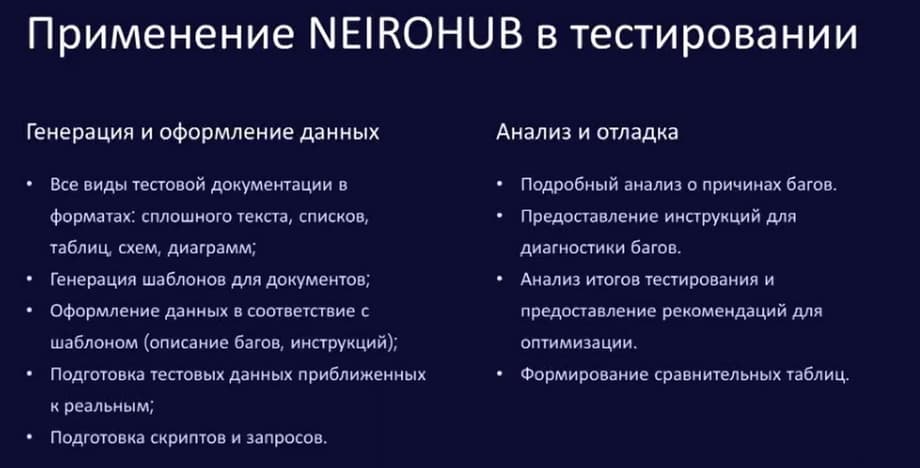

The slide shows some, though not all, of the capabilities that can cover most, if not all, of the time spent on the activities listed above. A neural network can not only generate data in various formats but also adapt information to templates and prepare test data that, importantly, is close to real data. We are also all familiar with using it to prepare scripts and queries, which are needed not only in automation but are also useful to manual testers. It can also provide a detailed analysis of the causes of bugs, give additional diagnostic instructions, analyze test results, build comparison tables, and much more. Importantly, all this is useful not only for, roughly speaking, junior specialists or beginners, but also for employees who have been working for a long time.
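Because generated test data can contain malformed or implausible records, it is worth checking before use. The sketch below assumes the model was asked to return a JSON array of customer records; the schema, the age range, and the sample response are hypothetical.

```python
import json

# Hypothetical schema for a customer test record: field name -> expected type.
SCHEMA = {"name": str, "email": str, "age": int}

def validate_test_data(raw: str) -> list:
    """Parse a JSON array from the model and keep only well-formed, plausible records."""
    valid = []
    for rec in json.loads(raw):
        if not isinstance(rec, dict) or set(rec) != set(SCHEMA):
            continue  # missing or extra fields
        if not all(type(rec[k]) is t for k, t in SCHEMA.items()):
            continue  # wrong types (exact type check also rejects True/False as int)
        if not 18 <= rec["age"] <= 100 or "@" not in rec["email"]:
            continue  # implausible values (assumed business rules)
        valid.append(rec)
    return valid

# Example response, as a model might return it (illustrative only).
raw = """[
  {"name": "Anna Petrova", "email": "anna.petrova@example.com", "age": 34},
  {"name": "Oleg Ivanov", "email": "oleg.ivanov@example.com", "age": "forty"},
  {"name": "Test User", "email": "not-an-email", "age": 250}
]"""

records = validate_test_data(raw)
print(records)  # only the first record survives
```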

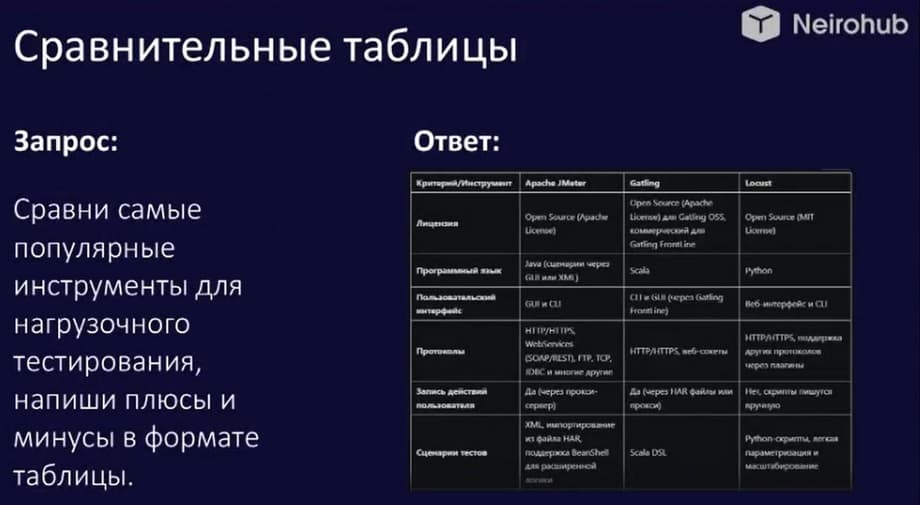

I suggest looking at several cases to better understand how the chat copes with particular tasks. The first is a comparison table.

As I said earlier, an analysis first requires collecting all the data it will be based on. In this case, the task was to present the most popular load-testing tools in tabular form. Instead of spending a couple of hours compiling a table yourself, or searching for ready-made ones and combining them, you get the information in a matter of minutes and can then analyze it yourself or, again, with the help of the neural network.
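If the comparison table comes back as a markdown table, it can be parsed into rows for further analysis. A minimal sketch, assuming a simple pipe-delimited table with a header row and a separator row; the sample reply is illustrative and is not the table from the slide.

```python
def parse_markdown_table(text: str) -> list[dict[str, str]]:
    """Turn a pipe-delimited markdown table into a list of row dicts."""
    lines = [ln.strip() for ln in text.strip().splitlines() if ln.strip().startswith("|")]
    header = [c.strip() for c in lines[0].strip("|").split("|")]
    rows = []
    for ln in lines[2:]:  # lines[1] is the |---|---| separator row
        cells = [c.strip() for c in ln.strip("|").split("|")]
        rows.append(dict(zip(header, cells)))
    return rows

reply = """
| Tool   | Protocols             | Scripting    |
|--------|-----------------------|--------------|
| JMeter | HTTP, JDBC, JMS       | GUI / Groovy |
| k6     | HTTP, WebSocket, gRPC | JavaScript   |
"""

rows = parse_markdown_table(reply)
for row in rows:
    print(row["Tool"], "->", row["Scripting"])
```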

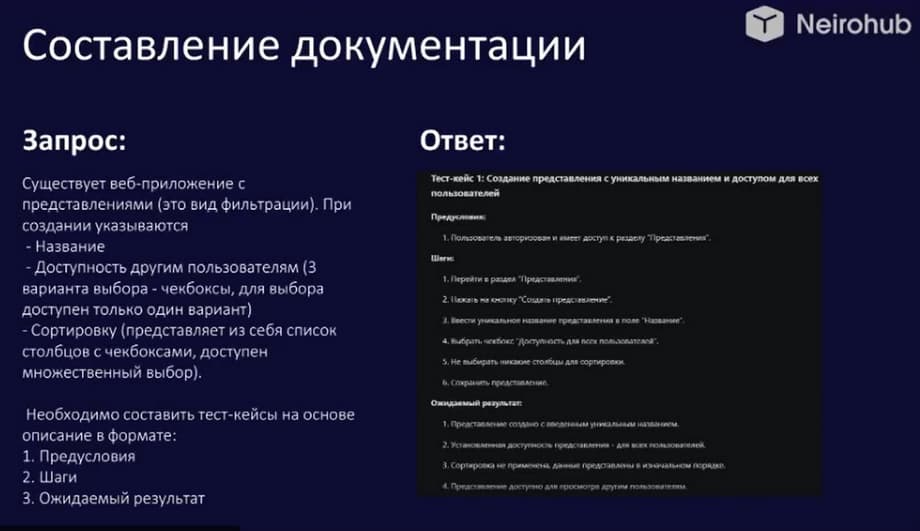

The next case is similar but still different: compiling documentation, in this case test cases. When we built the comparison table, we specified only the format of the report; when compiling test cases, we worked from a template, simplified but a template nonetheless. So in addition to the format we now have a template, and by giving the application minimal data we obtained the full set of necessary test cases. They may need some revision, since the specialist is always more immersed in the subject area, but we still significantly reduce the time spent writing most test cases.
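The "format plus template" approach can be sketched as a prompt builder that fills a fixed test-case template with minimal data about a feature. The template fields and the example feature are hypothetical; the actual template used in this case was not shown in full.

```python
from string import Template

# Hypothetical simplified test-case template, not the one from the webinar.
TEST_CASE_PROMPT = Template(
    "Write test cases for the feature below.\n"
    "Feature: $feature\n"
    "Requirements:\n$requirements\n"
    "Use this template for every test case:\n"
    "ID | Title | Preconditions | Steps | Expected result\n"
)

def build_prompt(feature: str, requirements: list[str]) -> str:
    """Fill the template with the minimal input data about one feature."""
    bullet_list = "\n".join(f"- {r}" for r in requirements)
    return TEST_CASE_PROMPT.substitute(feature=feature, requirements=bullet_list)

prompt = build_prompt(
    "Login form",
    ["Password must be at least 8 characters", "Account locks after 5 failed attempts"],
)
print(prompt)
```

Keeping the template fixed and varying only the input data makes the model's output easier to compare and review across features.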

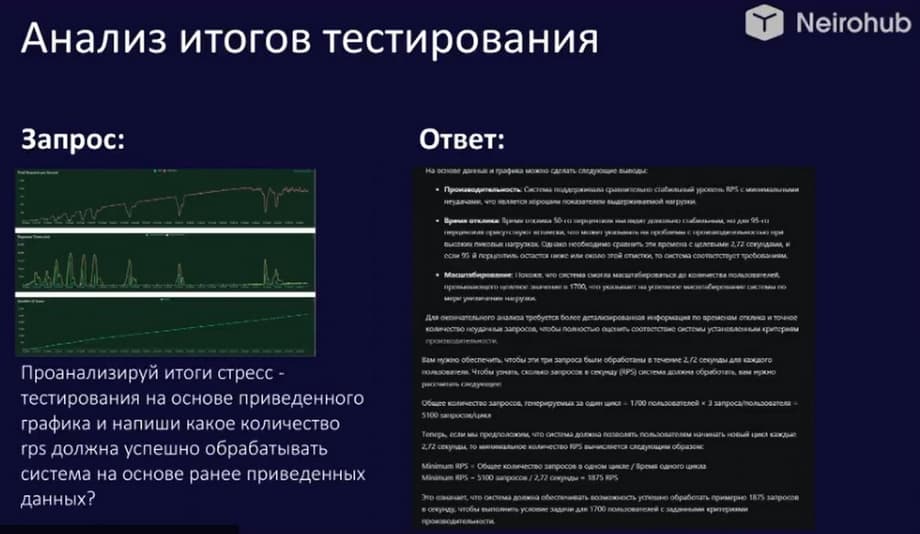

Finally, analysis of test results. Since the neural network can also process data from images, in this case we provided a graph with stress-test results and asked it to analyze them and give recommendations for processing requests successfully. The answer included a description of the data on the graph and its possible causes, calculations based on the results from which conclusions about the required load can be drawn, and recommendations on additional data that should also be taken into account. When we talk about opportunities, we must of course also talk about efficiency.
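Before moving on to efficiency, here is a minimal sketch of the kind of calculations mentioned above, useful for cross-checking a model's numbers against raw load-test data. It assumes you have per-request latencies for successful requests, an error count, and the test duration; the sample values are made up.

```python
def summarize(latencies_ms: list, errors: int, duration_s: float) -> dict:
    """Throughput, error rate and nearest-rank p95 latency for one test run."""
    if not latencies_ms:
        raise ValueError("need at least one successful request")
    total = len(latencies_ms) + errors        # all requests sent
    ordered = sorted(latencies_ms)
    rank = (95 * len(ordered) + 99) // 100    # ceil(0.95 * n) in integer math
    return {
        "rps": total / duration_s,
        "error_rate": errors / total,
        "p95_ms": ordered[rank - 1],
    }

# Made-up sample: 20 successful requests (100..290 ms), 5 errors, 5-second run.
stats = summarize(list(range(100, 300, 10)), errors=5, duration_s=5.0)
print(stats)  # {'rps': 5.0, 'error_rate': 0.2, 'p95_ms': 280}
```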

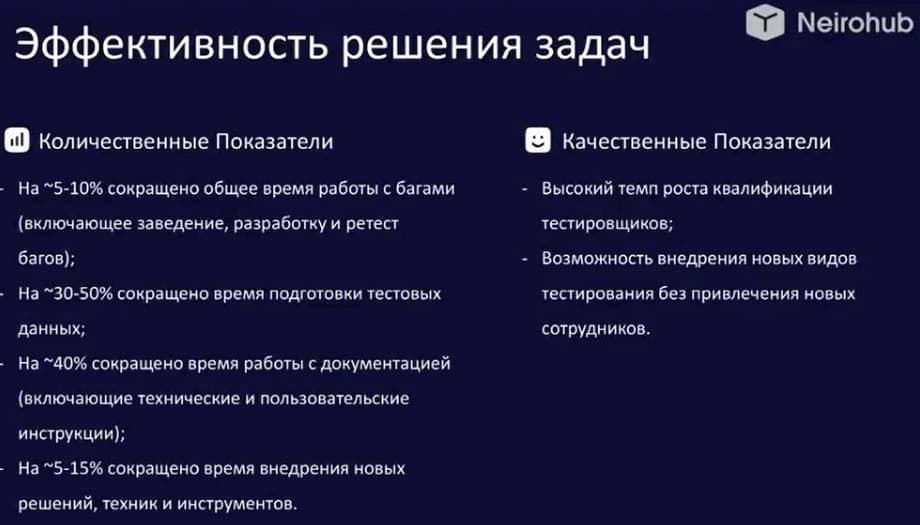

The slide shows two large groups of indicators: qualitative and quantitative. In terms of numbers, the first is a significant reduction in time.

The largest reductions, 40-50%, are seen in the tester's regular activities: preparing test data and preparing documentation, including technical and user instructions. Smaller but still significant reductions, up to 15%, are seen in working with bugs and implementing new solutions. It is important to note that for bug work, the total time was counted, simply because it is quite difficult to separate, for example, analyzing a bug from fixing it. It is also very difficult to separate testing from development, because the analysis may be prepared by specialists from either the testing or the development department. If we looked only at testing, we would not see these changes, because the reduction would simply have shifted to the development department, where time could probably be reduced by up to 50%, also given that many processes can run in parallel. The implementation of new solutions, meanwhile, is framed by business processes that can complicate or simplify it, so the figures here vary. If most of the implementation effort goes into communicating with colleagues, formalities, and bureaucracy, the reduction will be up to 15%; without such a strict bureaucratic component, it can rise to 30%. And, of course, there are qualitative indicators, which are very difficult to measure but are nevertheless present.
Qualitative indicators include faster growth in testers' qualifications: instead of taking six-month courses, for example, they can learn faster because the neural network gives them diagnostics, analysis, and ready-made solutions to learn from. They also include higher quality of the product being developed and the ability to introduce new types of testing without hiring new employees and without additional risks.

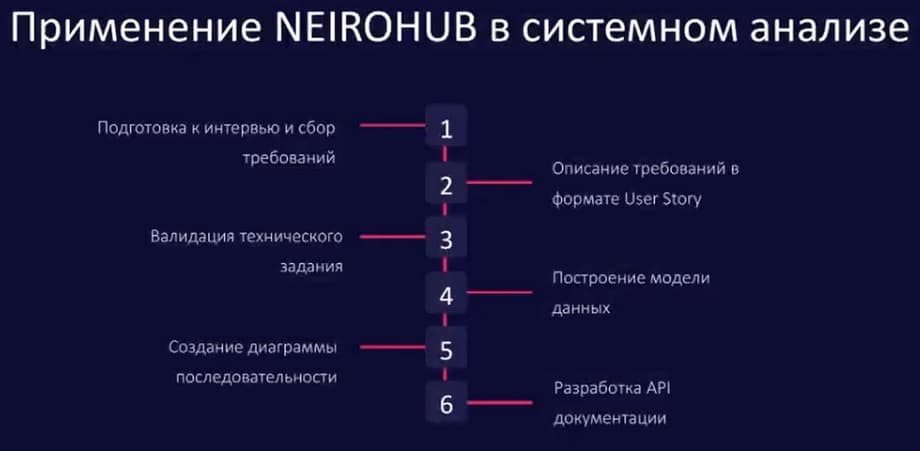

Now let's move on to the next case: applying AI in the system analysis department. We will start, of course, with preparing for interviews and gathering requirements. Then we will see how to record requirements in the User Story format, validate technical specifications, build a data model, create sequence diagrams, and develop API documentation. Let's go through each item in more detail.

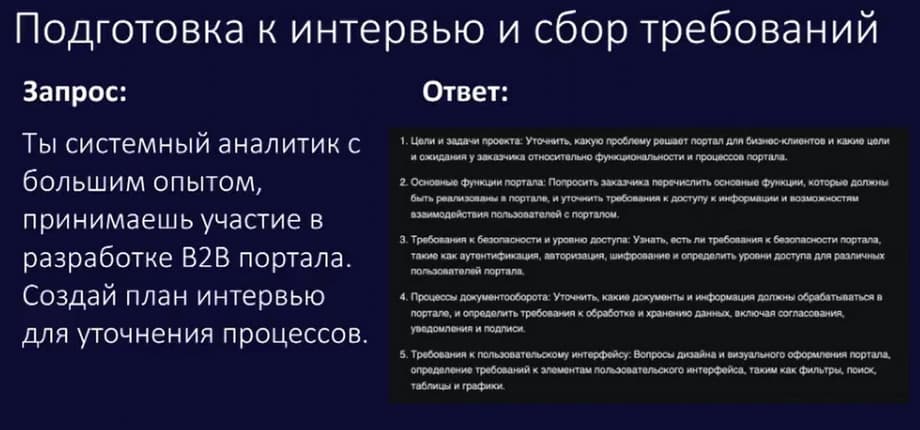

Before starting the system analysis process, it is important to prepare properly for interviews with customers or stakeholders, and a neural network can greatly facilitate this. The chat can formulate questions that serve as a starting point for gathering requirements, prepare an agenda, write a letter to the customer, and help identify key needs or initial user expectations.

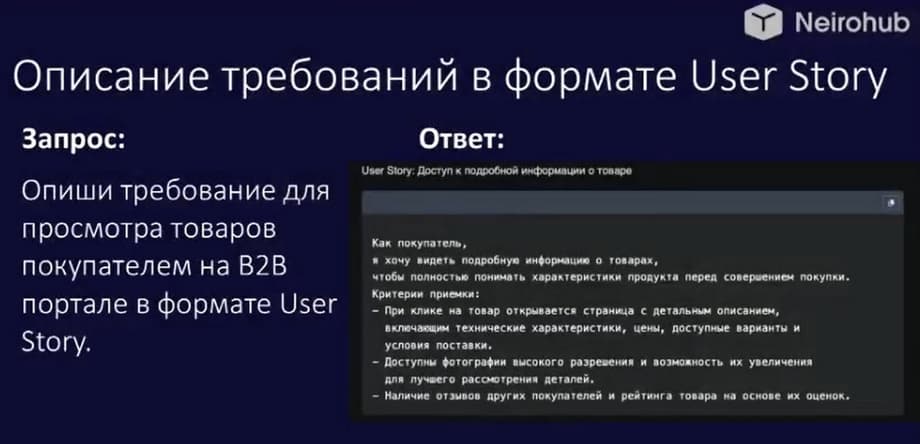

After collecting the requirements, it is necessary to structure them in such a way that they are understandable to all participants in the process. One of these formats is User Story.

Using a neural network, we can describe requirements in the User Story format. As the slide shows, the neural network returns a title for the User Story, the familiar "As a user, I want to..." statement, and acceptance criteria. User Story is not the only format: you can also use the network to describe requirements as Use Cases or simply as a list of functional and non-functional requirements.
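The User Story structure described above (a title, an "As a ..., I want ..., so that ..." statement, and acceptance criteria) can be captured in a small data type, which is handy for storing or reviewing the stories the model returns. A minimal sketch; the example story is hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class UserStory:
    title: str
    role: str
    goal: str
    benefit: str
    acceptance_criteria: list[str] = field(default_factory=list)

    def render(self) -> str:
        """Render the story in the common text format with numbered criteria."""
        lines = [
            self.title,
            f"As a {self.role}, I want {self.goal}, so that {self.benefit}.",
            "Acceptance criteria:",
        ]
        lines += [f"{i}. {c}" for i, c in enumerate(self.acceptance_criteria, 1)]
        return "\n".join(lines)

story = UserStory(
    title="Order status notifications",
    role="customer",
    goal="to receive a notification when my order status changes",
    benefit="I do not have to check the site manually",
    acceptance_criteria=[
        "A notification is sent within 1 minute of a status change",
        "The notification contains the order number",
    ],
)
print(story.render())
```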

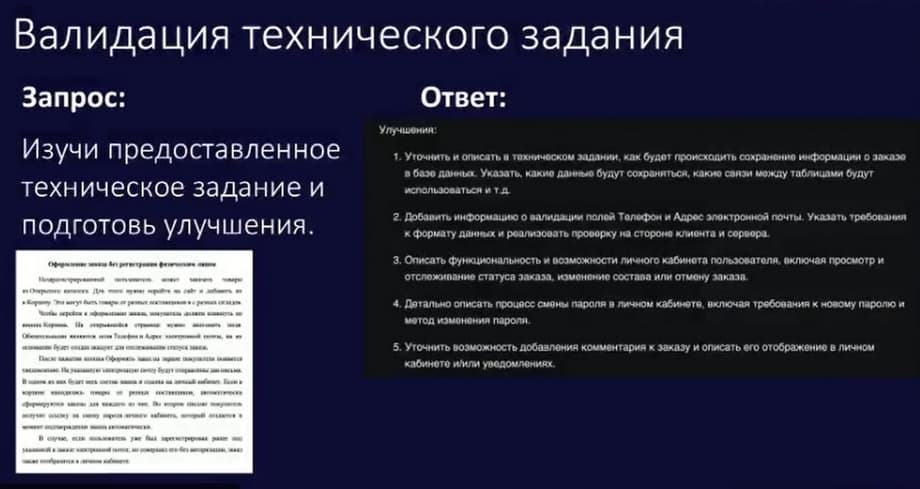

Once the technical specification has been compiled, it is important to validate it to make sure all requirements are complete and consistent. Using the chat to analyze the specification can help identify potential problems or contradictions while it is still being drafted. By setting the right context, you can validate it from the business side, checking the business requirements, and from the technical side, where the neural network can suggest improvements to the data model, the API integration, or other parameters.

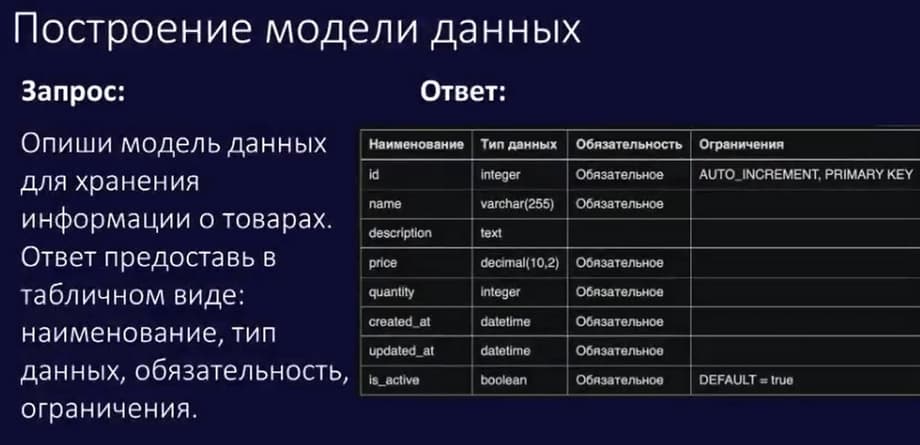

One of the key stages of system analysis is building a data model. Here the chat can be used to analyze requirements and build a preliminary data model. Besides identifying entities and specifying their attributes, the neural network can return its answer in a form familiar to you; for relational databases, for example, a tabular description is very common, as shown on the slide.
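A data model in tabular form maps fairly directly onto DDL. The sketch below assumes the model's table has been reduced to (entity, attribute, type, constraint) rows; the entities are hypothetical, and it uses Python's built-in `sqlite3` only to check that the generated statements actually execute.

```python
import sqlite3

# Hypothetical data model in the tabular form an LLM might return:
# (entity, attribute, SQL type, constraint)
MODEL = [
    ("customer", "id", "INTEGER", "PRIMARY KEY"),
    ("customer", "name", "TEXT", "NOT NULL"),
    ("orders", "id", "INTEGER", "PRIMARY KEY"),
    ("orders", "customer_id", "INTEGER", "REFERENCES customer(id)"),
    ("orders", "total", "REAL", ""),
]

def to_ddl(rows) -> list:
    """Group attribute rows by entity and emit one CREATE TABLE per entity."""
    tables = {}
    for entity, attr, sql_type, constraint in rows:
        tables.setdefault(entity, []).append(f"{attr} {sql_type} {constraint}".strip())
    return [f"CREATE TABLE {name} ({', '.join(cols)});" for name, cols in tables.items()]

conn = sqlite3.connect(":memory:")
for stmt in to_ddl(MODEL):
    print(stmt)
    conn.execute(stmt)  # fails loudly if the generated DDL is invalid

created = [r[0] for r in conn.execute("SELECT name FROM sqlite_master WHERE type='table'")]
print(created)  # ['customer', 'orders']
```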

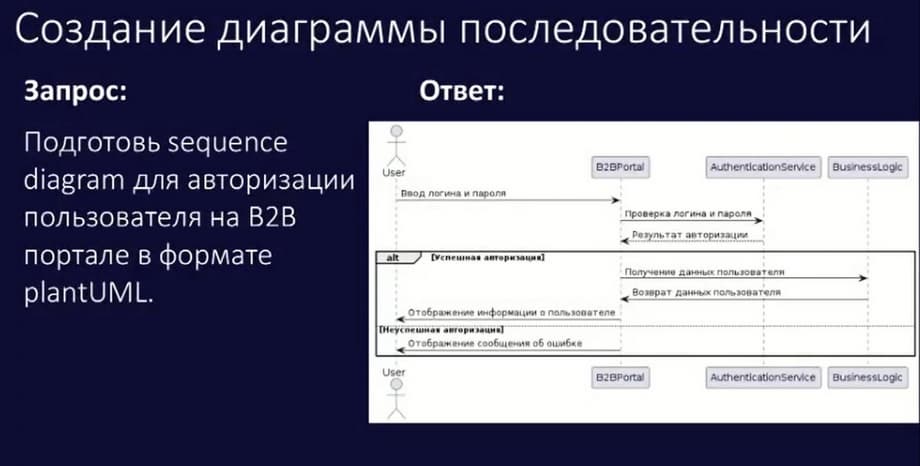

Sequence diagrams play an important role in visualizing the interaction between different systems and users. Here, too, the neural network can help automatically create various types of diagrams; the slide shows an example of a sequence diagram.
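Sequence diagrams of this kind are commonly written in PlantUML, where each message is a line such as `User -> WebApp : Submit order` between `@startuml` and `@enduml`. Here is a minimal Python sketch that builds such source from a list of messages, for example to assemble or sanity-check diagrams programmatically; the participants and messages are hypothetical.

```python
def sequence_diagram(messages: list) -> str:
    """Build PlantUML sequence-diagram source from (sender, receiver, label) tuples."""
    body = [f"{src} -> {dst} : {label}" for src, dst, label in messages]
    return "\n".join(["@startuml", *body, "@enduml"])

src = sequence_diagram([
    ("User", "WebApp", "Submit order"),
    ("WebApp", "API", "POST /orders"),
    ("API", "WebApp", "201 Created"),
])
print(src)
```

The printed text can be pasted into any PlantUML renderer to produce the diagram.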

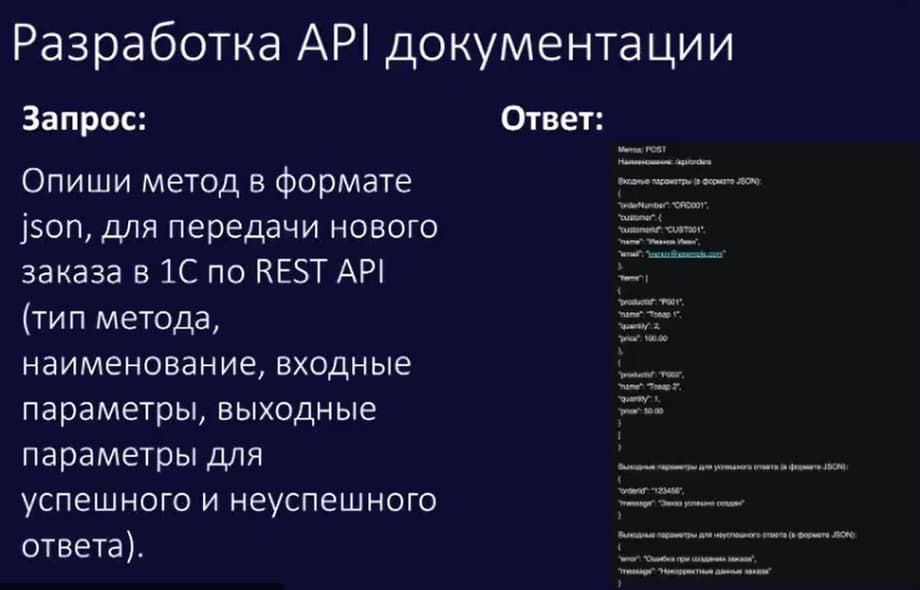

The neural network is very good at producing diagrams in PlantUML format, which has its own syntax. Once the code is generated, you just copy it, paste it into the appropriate place, and get the diagram you need. Finally, for many projects, developing API documentation is an integral part of the process.

The slide shows how the neural network produced a method for integrating 1C with a certain platform via a REST API in JSON format. Besides developing API documentation, the neural network can also validate an existing method, define entities for storing data from this method, and write the field mappings.
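Writing mappings between an API payload and storage entities can be sketched as a table of column names and JSON paths. Everything here is hypothetical: the payload and field names are illustrative and are not taken from 1C or from the method on the slide.

```python
import json

# Hypothetical JSON body of an order-export method (illustrative field names).
payload = (
    '{"Number": "000123", "Date": "2024-03-01", '
    '"Counterparty": {"INN": "7700000000", "Name": "LLC Example"}, "Amount": 15000.5}'
)

# Mapping: storage column -> path to the value in the JSON document.
MAPPING = {
    "order_number": ("Number",),
    "order_date": ("Date",),
    "customer_tax_id": ("Counterparty", "INN"),
    "customer_name": ("Counterparty", "Name"),
    "amount": ("Amount",),
}

def apply_mapping(doc: dict, mapping: dict) -> dict:
    """Build one storage row by following each JSON path in the mapping."""
    row = {}
    for column, path in mapping.items():
        value = doc
        for key in path:
            value = value[key]  # KeyError signals a field missing from the payload
        row[column] = value
    return row

row = apply_mapping(json.loads(payload), MAPPING)
print(row)
```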

We have covered the key aspects of using a neural network in system analysis, which can speed up an analyst's work by 20 to 40%.

Now let's summarize. The main departments where you can apply Neirohub, and AI in general, are the following. First, the technical team of developers and analysts: with AI, they will write code and test cases, draw up technical specifications, and conduct preliminary analytics faster. Second, marketing, which exists in almost any company with at least 10 employees: marketers will generate creatives and advertising copy, use various types of AI, and get insights. Third, sales, in any company larger than one person, and especially in complex or project sales such as custom development: AI will help analyze customer requirements, advise on the product's existing functionality and properties, and help navigate the existing documentation. Fourth, technical support, in any company that provides services: it uses AI to navigate documentation and to advise on technical, legal, and product issues.

We believe that using artificial intelligence is now a must-have. Just as every company once had to have a website simply to exist, be visible, and present itself, we are now entering an era in which every company uses AI resources in its business processes. If you are ready to implement it, go to Telegram and send the word Neirohub. We will advise you in more detail on what we can do and how Neirohub can help with your specific processes, and we will analyze your existing problems. Implementation takes from 1 to 3 weeks. We can customize it and implement it in different formats: as access to the platform, as an installation within your company's infrastructure with some customization, or as a boxed solution.
