At one of our previous meetings, we analyzed the amendments to the Code of Administrative Offenses that increased liability for violating personal data security requirements and introduced turnover-based fines for personal data leaks. Today, we are meeting to analyze technologies for protecting personal data that help prevent leaks and avoid those fines.

When you look at the personal data protection options available on the market, reasonable questions and doubts arise. Systems either fail to detect personal data in traffic reliably, or they literally bury the information security specialist under hundreds of false positives on salary slips and email signatures, blocking legitimate business processes outright. If this situation is familiar to you, or if you are just choosing tools to protect personal data, join today's meeting and learn about the capabilities of InfoWatch Traffic Monitor, a Russian DLP system with specialized, patented technologies for protecting personal data and the ability to verify that a recipient is authorized to receive it. This lets you protect personal data effectively without disrupting business processes or wasting the information security team's time on false positives. We will discuss why personal data is the most difficult information asset to protect, and we will talk about the InfoWatch technology for protecting personal data, which can instantly detect the theft of even a single personal data record. We will also look at how to achieve this without blocking everyday business processes.

Our speaker today is Alexander Klevtsov, Head of Product Development for InfoWatch Traffic Monitor.

Today we will talk about how to protect personal data. Our title is slightly provocative: "How to protect personal data and not 'break' business processes." In other words, how do we make personal data protection effective?

In the introduction, I will remind you why protecting personal data matters and discuss the role of DLP products in that protection. We will talk about the problems associated with protecting personal data, the problems with DLP itself, and why not all DLP systems are equally useful. First we will lay out the problem, and then we will talk about its solution and look at specific steps.

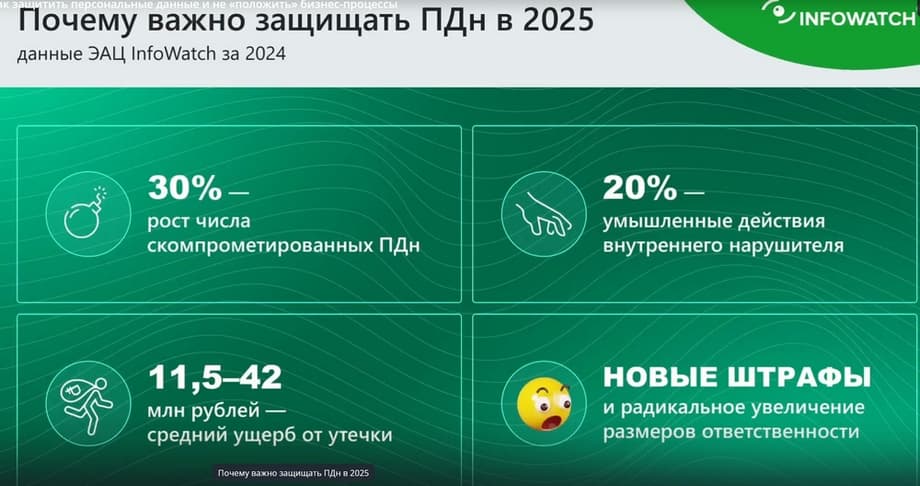

Much has been said about the importance of protecting personal data and, above all, protecting it against leaks. Problems with personal data can lead to financial losses. Research by our InfoWatch Scientific Center, carried out together with a partner agency, concluded that the average damage from a leak ranges from 11.5 to 42 million rubles.

This figure is a composite: it includes direct and indirect damage, fines, the cost of investigating personal data leaks, and the cost of eliminating their consequences. According to statistics, in 2024 the number of incidents involving compromised or stolen personal data, and of personal data leaks of all kinds, grew by 30%. I would like to emphasize separately that 20% of these leaks were intentional actions by employees, that is, by insiders. The threat came from an employee, and the employee carried it out: an employee stole personal data. And, of course, the legislation on fines for personal data leaks has become stricter.

Let's briefly analyze what measures a company needs to take to organize the protection of personal data.

This is a complex undertaking. It involves certification of systems, employee training, and technical measures such as secure communication channels, encryption, access control both in the systems where personal data is stored and at the operating system level, auditing the locations where this data is stored, and much more. What would I like to emphasize here? In any company, there are, and always will be, employees who legitimately have access to personal data as part of their job responsibilities.

And no matter how well you protect yourself from an external attacker, or how you organize encrypted channels and access control, one of your own employees can still steal this data. A standard practice in banks, for example, is to process personal data in closed network segments: business-critical systems, such as the automated banking system (ABS), are placed in a closed segment with no Internet access, which seemingly reduces the risk of personal data leaks and related violations. But there are always employees who have access both to the closed segment and to the open, Internet-connected one, so the potential risk of a leak is always there. In other words, you cannot do without DLP. Whatever else we do, we cannot do without controlling the channels through which this personal data could be sent out.

I looked at what the market and the Internet say about the problems of protecting personal data with DLP. The common view is that it is enough to deploy DLP, run some kind of audit, configure it, and personal data will be protected. In fact, this is not quite true. Let me put it bluntly: DLP in its classic form protects personal data very poorly. I'll explain why now. It is because personal data is an asset that is hard to formalize and therefore hard to protect.

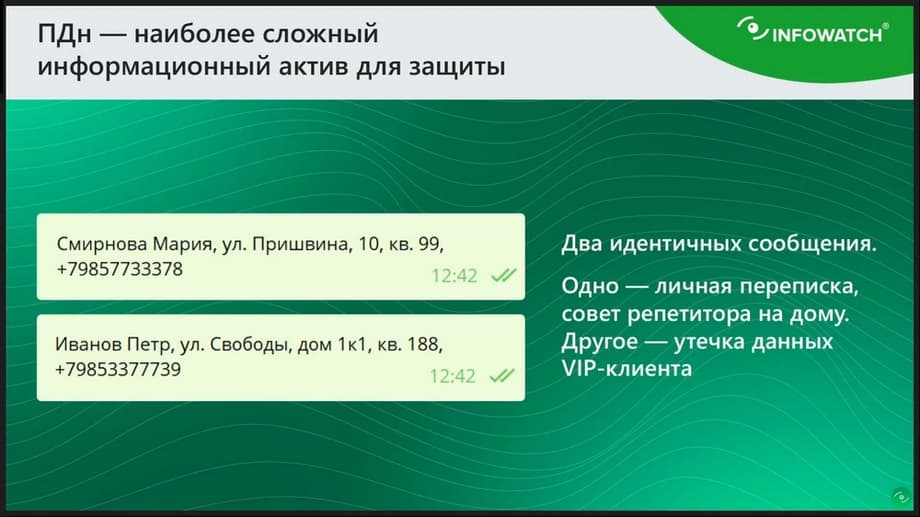

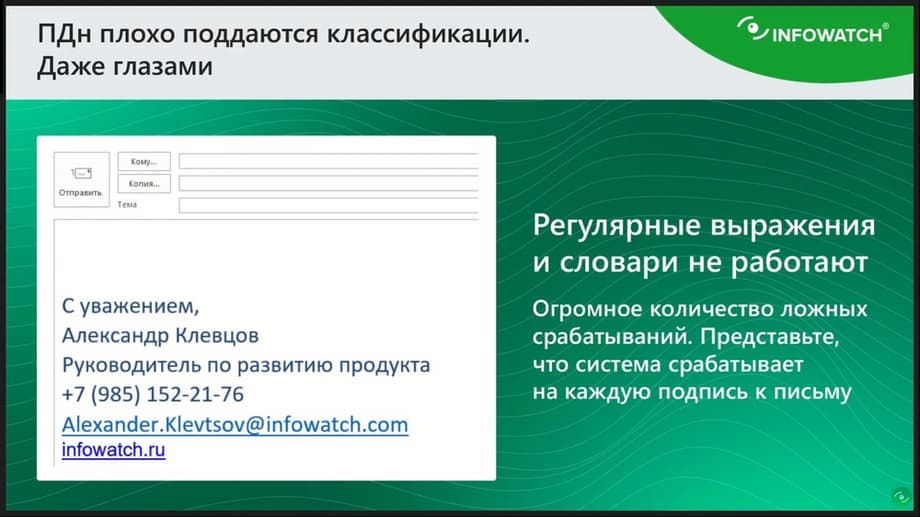

Please note the example on the slide: there are two messages. One of them is a data leak disguised as a harmless message; the other is just employee chatter. The two messages look completely alike, yet one is a leak and the other is not. This is a real case from a bank. Under the guise of harmless messages, supposedly recommending foreign language tutors, an employee was passing data on VIP clients to an outside party in short messages like these. How was this data used? Someone called the client, introduced themselves, and offered more favorable terms on banking products. In the end, the client moved a very substantial portfolio of assets to another bank.

It is very difficult to catch such a leak with a classic DLP setup. How would we catch a last name, first name, and phone number? If we write a regular expression for the last name and phone number, we get false positives, as on this slide, on every email signature. Dictionaries are not an option either, given that a bank may hold hundreds of thousands or even millions of personal data records. So we have no way to classify such a message. If we try to make the DLP catch every message that mentions a combination of phone number, last name, and first name, we will get a huge number of false positives and will be worn out sorting through those events later. And there is another, separate problem.
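To make the false-positive problem concrete, here is a minimal Python sketch of a "classic" content rule of the kind described above: a regular expression for a name followed by a phone number. The pattern, the sample messages, and the function name `classic_pd_rule` are invented for illustration and are not taken from any DLP product.

```python
import re

# Hypothetical "classic" content rule: a capitalised first/last name pair
# followed (possibly on a later line) by something that looks like a phone number.
NAME_THEN_PHONE = re.compile(
    r"\b[A-Z][a-z]+ [A-Z][a-z]+\b.*?\+?\d[\d\s()-]{8,}\d",
    re.DOTALL,
)


def classic_pd_rule(text: str) -> bool:
    """Return True if the text merely *looks like* it contains personal data."""
    return NAME_THEN_PHONE.search(text) is not None


# An ordinary e-mail signature, a disguised leak of a client's contacts, and chatter.
signature = "Best regards,\nAnna Smirnova\nSales department, +7 495 123-45-67"
leak = "Found a great French tutor: Oleg Petrov, +7 916 555-12-34, very flexible."
chatter = "Lunch at one? The usual place."

for label, msg in [("signature", signature), ("leak", leak), ("chatter", chatter)]:
    print(label, classic_pd_rule(msg))
# signature True
# leak True
# chatter False
```

The rule fires identically on the signature and on the leak: pattern matching can say that a message contains *some* name and *some* phone number, but not whether they belong to a protected client.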
Suppose everything is configured so that the system reacts to any mention of personal data: a phone number, a last name, a first name. Then the security officer, looking at the intercepted events, at a potential incident with such text, will never be able to tell whether it is a leak, unless he knows the contact details of all the bank's clients by heart.

For example, the message says: "Hello! Here are the details of a French tutor." How can a security officer tell that this is a leak? It is a different matter when an obvious leak is sent out as photos, an Excel spreadsheet, or a report in which you can see lists of last names, addresses, and phone numbers. That case is clear, and any DLP will easily react to it. A security officer looking at such an array of data will understand that it is an extract from some database. But with a small message like this one, neither the DLP nor the security officer can identify it as a leak.

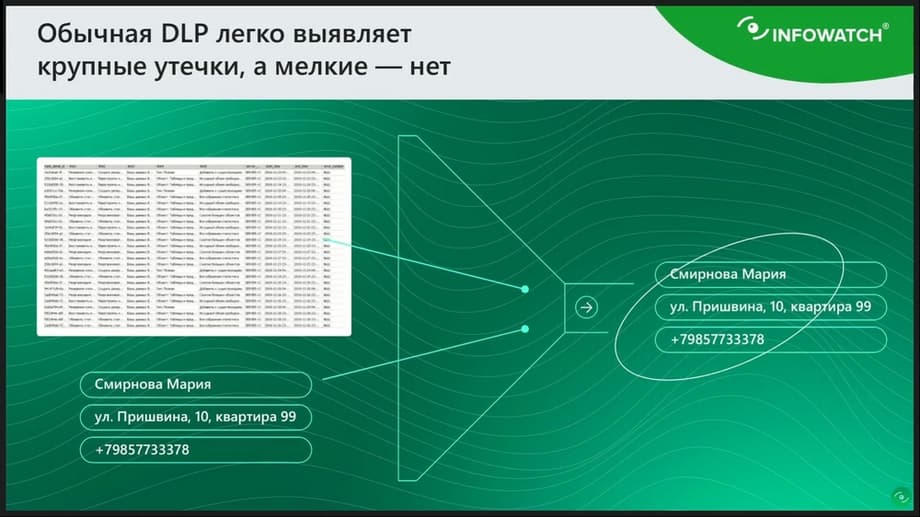

In fact, things are even worse. As I said, DLP detects bulk leaks well: a filter will stop a table from being sent out.

But it cannot detect a single small message that is indistinguishable from an ordinary signature. Such a small leak is very hard to catch. Nevertheless, even a small leak can cause direct financial losses that we may never even learn about. These are not the big leaks where an entire database is dumped, surfaces in the media, and is used specifically to compromise or discredit the company. This is a small, quiet leak that is exploited without our knowing anything about it. Neither the system nor any person knows it happened.

But our problem is worse still. In almost any company there are processes in which transferring personal data, including outside the company's perimeter, is entirely legitimate. Examples include email newsletters in which financial organizations send customers personalized products and offers; that is exactly where personal data goes out. Personal data is also frequently transferred inside the company: salary slips, voluntary health insurance (DMS) policies, and much more. My point is that you cannot tighten the screws so far that no personal data can be sent to anyone anywhere. There are many legitimate processes in which personal data is transferred.

And here is an even harder task: what if we need to determine that a given portion of personal data is being sent to its owner?

For example, that a bank client's personal offer is being sent to that client, or that an employee's sensitive personal data is being sent to that specific employee. How do we solve this when the security officer does not simply see the information but must also understand that it is going to, say, the right bank client? He sees a message such as: "Dear Ivan Ivanovich, we would like to offer you these changes to these products," addressed to a personal mailbox such as Alex.34@gmail.com. How can the security officer determine that this really is the email of the client being addressed? He cannot. How could this be done, in theory, in a DLP system?

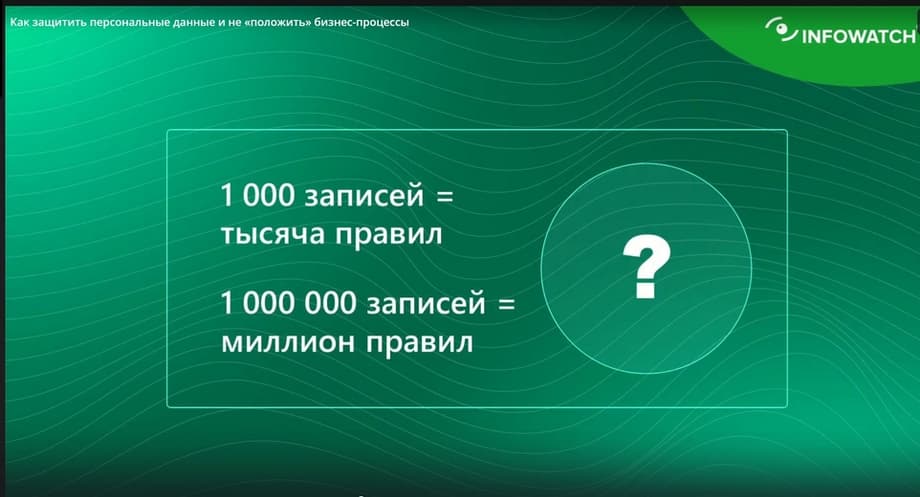

In a classic DLP system, we would have to create, for example, 1,000 rules for 1,000 portions of personal data, pairing each specific portion of personal data with its specific recipient. But if you have a million clients, the task again becomes unsolvable.

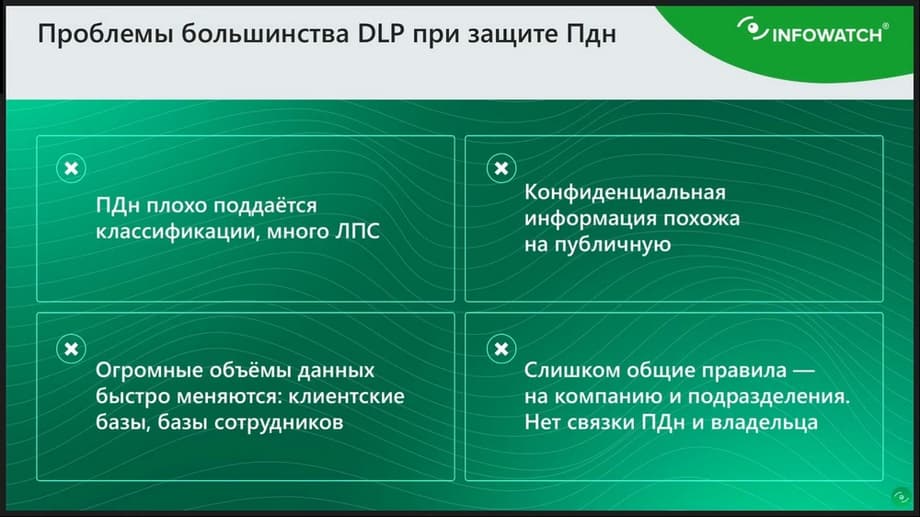

Let's summarize: what problems do we face in protecting personal data?

Personal data is hard to classify, because it is by nature tied to named individuals: a specific phone number, a specific full name, a specific address, and so on. When we talk about protecting personal data, it is not enough to catch some phone number or some address. We need to catch a specific address, a specific phone number, a specific mention of a specific person. This is the main problem of personal data protection. That is what this block on the slide means: confidential information that looks like public information. It is a continuation of the same problem, namely that sensitive personal data is hard to distinguish from public data.

The second problem is huge volumes of data: small records that can number in the millions. The third fundamental problem is that classic DLP rules are too general. You cannot granularly configure a rule for a specific portion of data and a specific recipient in order to verify that personal data is going to its owner. In theory you can, but you would have to create hundreds of thousands of rules and keep them all up to date. That is why this is very difficult to implement within the paradigm we are all used to.

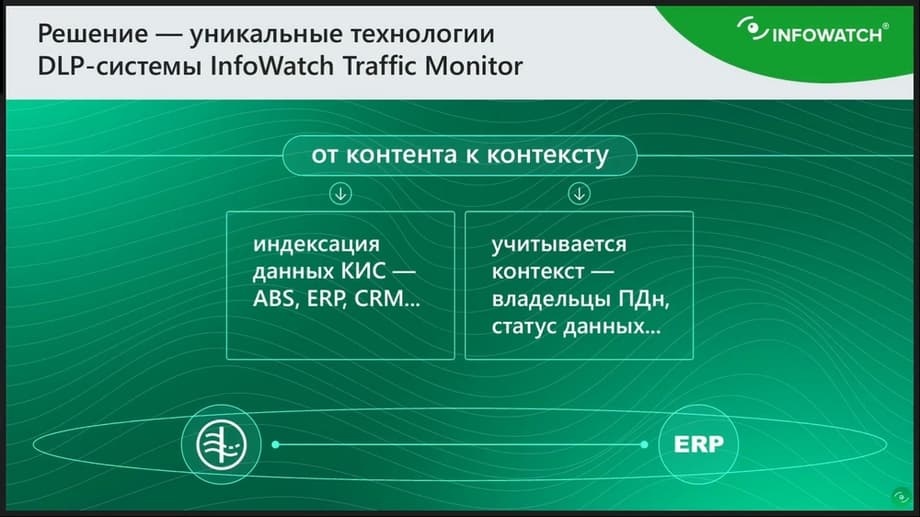

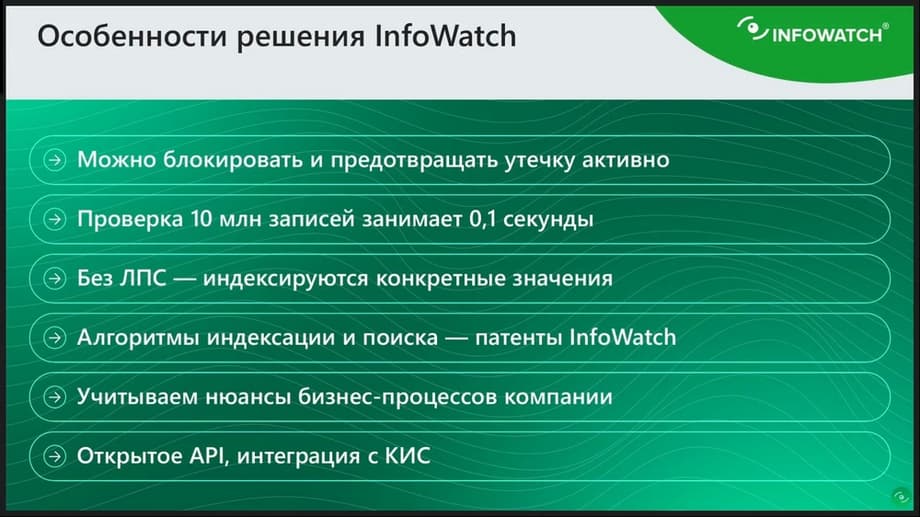

Based on these problems, InfoWatch created a technology that moves from content to context. Instead of analyzing content to classify personal data in general terms (phone numbers, account numbers, full names, addresses), it recognizes that a message contains a specific portion of personal data that has a specific owner and a specific source.

In other words, we move to a granular definition of personal data values for each owner: from content to context. This is achieved by indexing data from corporate information systems. The DLP system, Traffic Monitor, integrates with a corporate ERP or CRM system, or even an electronic document management system, extracts the personal data from it, and indexes it. Put simply, it builds an automatic policy that can contain hundreds of thousands or even millions of rules. This policy does not need to be kept up to date manually: the data is indexed from the corporate information systems and protected automatically.
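The "content to context" idea can be illustrated with a minimal Python sketch: records exported from a corporate system are normalized and indexed, and a message is checked for the values of a *specific* owner rather than for anything that looks like a phone number. Everything here is an assumption for illustration, not Traffic Monitor's actual indexing algorithm: the `Record` fields, the `PersonalDataIndex` class, the normalization rules, and the criterion that an owner counts as found only when both his indexed name and phone occur in the text.

```python
import re
from dataclasses import dataclass


# Hypothetical record shape exported from a CRM/ERP; real field sets differ.
@dataclass(frozen=True)
class Record:
    owner_id: str
    full_name: str
    phone: str
    email: str


PHONE = re.compile(r"\+?\d[\d\s()-]{8,}\d")


def norm_phone(raw: str) -> str:
    """Keep the last 10 digits so '+7 (916) ...' and '8 916 ...' compare equal."""
    return re.sub(r"\D", "", raw)[-10:]


def norm_name(raw: str) -> str:
    """Case-fold and collapse whitespace."""
    return " ".join(raw.casefold().split())


class PersonalDataIndex:
    """Context lookup: which *specific* owners' data appears in a message."""

    def __init__(self, records: list[Record]) -> None:
        self.by_phone: dict[str, set[str]] = {}
        self.by_name: dict[str, set[str]] = {}
        self.records = {r.owner_id: r for r in records}
        for r in records:
            self.by_phone.setdefault(norm_phone(r.phone), set()).add(r.owner_id)
            self.by_name.setdefault(norm_name(r.full_name), set()).add(r.owner_id)

    def owners_in(self, text: str) -> set[str]:
        """Owners whose indexed name AND phone both occur in the text."""
        phones = {norm_phone(m) for m in PHONE.findall(text)}
        words = re.findall(r"\w+", text.casefold())
        pairs = {f"{a} {b}" for a, b in zip(words, words[1:])}
        phone_hits = set().union(*(self.by_phone.get(p, set()) for p in phones))
        name_hits = set().union(*(self.by_name.get(n, set()) for n in pairs))
        return phone_hits & name_hits


index = PersonalDataIndex([
    Record("c-1001", "Oleg Petrov", "+7 (916) 555-12-34", "oleg.petrov@example.com"),
])
print(index.owners_in("Found a great French tutor: Oleg Petrov, +7 916 555-12-34"))  # {'c-1001'}
print(index.owners_in("Best regards,\nAnna Smirnova\n+7 495 123-45-67"))  # set()
```

The design point is that one index replaces the per-record rules: adding a client means adding a row to the export, not writing a new rule, and the disguised "tutor" message now resolves to a concrete owner while an unrelated signature does not.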

I suggest we look at a specific example, one that was implemented using this technology.

Take a bank with 20,000 employees. The task was to protect the personal data of these employees, any of it: DMS policies, contracts, salary slips. The protection had to work as follows: data about an employee may be sent only to that employee and to no one else. If, for example, Ivanov's DMS policy accidentally goes to Petrov, that is already a violation. There is a nuance, however: employees must be free to manage their own personal data as they see fit, and we should not restrict them. An important requirement in this task was that corporate messages sending an employee's data to the wrong employee must be blocked. So, if the HR department sends a DMS policy to the employee who owns it, that is normal. If the employee then forwards that policy to his personal mailbox or sends it to his wife, that is not a violation either, because he is disposing of his own data.

How was this implemented with this technology? The DLP system was integrated with the bank's corporate ERP (HR) system. For each employee, all data that can identify that employee was pulled from it, together with the email address specified in the employee's record. How did the protection work? The automatic policy identified a specific portion of personal data, and the actual recipient of that data was compared with the email indexed from the employee's record. If the actual recipient did not match the indexed email, this was treated as a violation. The policy does not need to be maintained manually: it is updated automatically, synchronizing ten times a day. This provided granular protection of employees' personal data. Among other things, it solved as basic a task as distributing salary slips. Before this, the DLP system lit up red twice a month whenever salary slips were sent out, because it treated them as financial information, that is, as a leak, and could not tell whether a slip was going to its owner or to someone else.
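The recipient check described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the product's implementation: it assumes the owners of the data in a message have already been identified (for example, by an index lookup), and the `OutgoingMessage` type, the `recipients_ok` function, and the `owner_email` snapshot of addresses from employee records are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class OutgoingMessage:
    sender: str
    recipients: list[str]
    # Employee IDs whose personal data was detected in the message
    # (e.g. by an index lookup); how they are detected is out of scope here.
    owners: set[str] = field(default_factory=set)


def recipients_ok(msg: OutgoingMessage, owner_email: dict[str, str]) -> bool:
    """Rule: data about an employee may go only to that employee's indexed e-mail."""
    for owner in msg.owners:
        expected = owner_email.get(owner)
        if expected is None:
            return False  # owner unknown to the index: legitimacy cannot be proven
        if any(r.casefold() != expected.casefold() for r in msg.recipients):
            return False  # at least one recipient is not the data's owner
    return True


# Hypothetical snapshot of e-mails taken from employee records; in the case
# described above, the real data was re-synchronized ten times a day.
owner_email = {"e-17": "ivanov@bank.example", "e-42": "petrov@bank.example"}

payslip_ok = OutgoingMessage("hr@bank.example", ["ivanov@bank.example"], {"e-17"})
payslip_wrong = OutgoingMessage("hr@bank.example", ["petrov@bank.example"], {"e-17"})
print(recipients_ok(payslip_ok, owner_email))     # True
print(recipients_ok(payslip_wrong, owner_email))  # False -> block
```

This is why a payslip mailing stops being noise: each slip addressed to its owner passes, and only a misaddressed one is flagged. On its own, this rule would also block an employee forwarding his own policy; the talk addresses that case with a second rule about the sender.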

Question. What if the data is sent not by email but through a messenger?

Answer. In our practice, such a process is unlikely to run through a messenger; HR is unlikely to send payslips that way. In this scenario, all personal data is sent, as regulated, only through corporate email. If we allow personal data to be sent through any channel whatsoever, then DLP in that case is like a few meters of wall standing in an open field. So it comes down to organizing the channels correctly: the company's local regulations should state that all personal data and all sensitive information about employees is sent only through this corporate channel.

Question. Should the direction of message transmission be taken into account, so that forwarding within the organization can be filtered out?

Answer. Again, if HR accidentally sends the data to the wrong employee inside the company, we will not catch that leak. So, unfortunately, the direction of transmission does not help with granular protection of personal data.

Question. And if the organization uses its own corporate messenger, how does the system handle such events?

Answer. If you decide that it is a regulated channel, there is no problem, whatever the channel is. The technology works on any communication channel; there is no difference. We used email as the example, but the technology applies to every channel.

Question. What if the payslips are packed into password-protected archives? The archive name is depersonalized, just a string of digits.

Answer. Look, if you allow password-protected content to be sent inside the company, then of course no DLP will help you. If, however, each employee has an individual password, you may not have this problem: the employee knows that the password is his alone, and then this approach is relevant.

Question. Andrei asks: how can protection be implemented if the insurance company itself sends DMS policies to everyone?

Answer. Then you would need to ask the insurance company to deploy DLP. If it sends out DMS policies individually, the insurance company is a point of failure in this case. But it can be solved like this: we index the data from the ERP system as well and monitor incoming mail. On incoming mail, you can even restrict the check to the insurance company's specific domain: if a message contains a policy, we identify it as the policy of a particular employee, and if the actual recipient does not match that employee, we can stop the message. So yes, the problem can be solved not by deploying DLP at the insurance company, but within your own internal DLP.
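The inbound variant can be sketched the same way. This minimal Python sketch assumes that the employees whose policies appear in a message have already been identified; `INSURER_DOMAINS`, `insurer.example`, and `incoming_verdict` are hypothetical names, and restricting the check to one sender domain follows the option mentioned in the answer above.

```python
# Hypothetical domain of the insurance company; replace with the real one.
INSURER_DOMAINS = {"insurer.example"}


def incoming_verdict(
    sender: str,
    recipients: list[str],
    owners: set[str],
    owner_email: dict[str, str],
) -> str:
    """Stop incoming insurer mail whose policy owner is not the actual recipient."""
    domain = sender.rsplit("@", 1)[-1].casefold()
    if domain not in INSURER_DOMAINS:
        return "pass"  # this sketch only scopes the check to the insurer's mail
    for owner in owners:
        expected = owner_email.get(owner, "").casefold()
        # Unknown owner, or any recipient other than the owner: stop the message.
        if not expected or any(r.casefold() != expected for r in recipients):
            return "stop"
    return "pass"


owner_email = {"e-17": "ivanov@bank.example"}
print(incoming_verdict("dms@insurer.example", ["ivanov@bank.example"], {"e-17"}, owner_email))  # pass
print(incoming_verdict("dms@insurer.example", ["petrov@bank.example"], {"e-17"}, owner_email))  # stop
```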

Question. In that case, do I need to compile a list of legitimate email addresses in advance for this to work properly?

Answer. Yes. With granular protection of a specific portion of personal data, we naturally have to get the legitimate email from somewhere. Here we took it from the ERP system, because such systems not just often but always store the employee's contact email. In a sense, we are doomed to succeed here, because this data is always kept in corporate systems.

Question. Are there any tools that protect against photographing the screen?

Answer. There are tools that supposedly detect an employee pointing a phone camera or smartphone at the monitor. But, as practice has shown, this is not very effective; unfortunately, such tools do not do it very well. When we talk about photographing the screen, especially where there is no video surveillance, the point is not to prevent the leak as such but to control what data is displayed at the employee's workstation. I hope I have answered the questions.

Let's talk about the details now. To repeat, this case has been implemented in a bank with 20,000 employees. Data about an employee cannot be sent to anyone else, only to that employee, and the employee can then dispose of the data as he wishes. The automatic policy checks whether the sender of the personal data is its owner; if so, the sender is not restricted in any way. In other words, the automatic policy implements two automatic rules: one checks that the recipient of the personal data matches its owner, and the other checks whether the sender of the personal data is the owner.
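The two rules can be combined into a single verdict. This is a minimal Python sketch, assuming the owners of the data in a message are already known and that a message passes when, for every owner, either rule holds; `policy_verdict` and the sample addresses are hypothetical, and this is one reading of the rules described above rather than the product's documented logic.

```python
def policy_verdict(
    sender: str,
    recipients: list[str],
    owners: set[str],
    owner_email: dict[str, str],
) -> str:
    """Return "allow" or "block" for a message carrying the given owners' data.

    Rule A: the sender is the owner, who may dispose of his own data freely.
    Rule B: every recipient is the owner's indexed e-mail.
    Each detected owner must satisfy at least one of the two rules.
    """
    for owner in owners:
        expected = owner_email.get(owner)
        if expected is None:
            return "block"  # unknown owner: cannot verify
        expected = expected.casefold()
        if sender.casefold() == expected:
            continue  # Rule A
        if recipients and all(r.casefold() == expected for r in recipients):
            continue  # Rule B
        return "block"
    return "allow"


owner_email = {"e-17": "ivanov@bank.example", "e-42": "petrov@bank.example"}
# HR sends Ivanov his own DMS policy: allowed by rule B.
print(policy_verdict("hr@bank.example", ["ivanov@bank.example"], {"e-17"}, owner_email))
# HR sends Ivanov's policy to Petrov: blocked.
print(policy_verdict("hr@bank.example", ["petrov@bank.example"], {"e-17"}, owner_email))
# Ivanov forwards his own policy to his wife's personal address: allowed by rule A.
print(policy_verdict("ivanov@bank.example", ["wife@mail.example"], {"e-17"}, owner_email))
```

Rule A is what keeps the policy from getting in the employee's way: once the data leaves through its owner's own mailbox, the system does not second-guess where it goes.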

Now let's look at this in more detail.

This whole case was implemented in blocking mode, with no deferred processing. A message is intercepted, the system determines that the actual recipient and the owner of the personal data do not match, and the message is blocked immediately. All of this happens very quickly. One figure we often cite: checking any message, even a short messenger message, against a database of ten million personal data records takes no more than a tenth of a second. We can check every message and every email for mentions of specific personal data from a given database. Again, this is possible because we index the data from the systems where it is stored.
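The tenth-of-a-second figure is the speaker's, and the sketch below does not reproduce or benchmark it. It only illustrates, assuming an in-memory hash set of normalized phone numbers (a simplification chosen for this example), why the cost of checking one message can stay small as the database grows: each candidate value in the message costs one average constant-time set lookup, independent of the number of indexed records. The database size is scaled down and filled with synthetic numbers; `indexed_phones` and `mentions_indexed_phone` are hypothetical names.

```python
import random
import re
import time

# Synthetic stand-in for an indexed database: N random 10-digit phone numbers.
# N is scaled down from the ten million mentioned in the talk to keep the demo quick.
N = 200_000
rng = random.Random(0)
indexed_phones = {f"9{rng.randrange(10**9):09d}" for _ in range(N)}
known = next(iter(indexed_phones))

PHONE = re.compile(r"\+?\d[\d\s()-]{8,}\d")


def mentions_indexed_phone(text: str) -> bool:
    """True if any phone-like token in the text is in the index (last 10 digits)."""
    # Each candidate costs one average O(1) set lookup, regardless of N.
    return any(re.sub(r"\D", "", m)[-10:] in indexed_phones for m in PHONE.findall(text))


msg = f"Call him at +7 {known[:3]} {known[3:6]}-{known[6:8]}-{known[8:]}"
start = time.perf_counter()
hit = mentions_indexed_phone(msg)
elapsed_ms = (time.perf_counter() - start) * 1000
print(hit, f"{elapsed_ms:.3f} ms")
```

The measured time will vary by machine; the point is the shape of the cost, not the number.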

Illustrations provided by the InfoWatch press service.
